Latency is the Achilles’ heel of anything delivered over the internet. How long is anyone willing to wait for content to load? Whether it’s images, video, audio or files, people don’t want to wait, and when everyone wants the same thing at the same time, latency suffers.

Imagine drinking a glass of water through a straw: you get a good sip every time, and it doesn’t take long to reach the bottom. Now imagine sharing that glass with someone else who has their own straw. Each of you gets less per sip, and the glass has to be refilled before both of you have had as much as you would have had alone.

That slowdown and refilling is latency. The full data transfer takes longer because of congestion, limited computing power, file size or type, or a variety of other factors. Whatever the cause, delivery is simply slower.

That’s what content-delivery networks (CDNs) aim to solve.

What is a CDN?

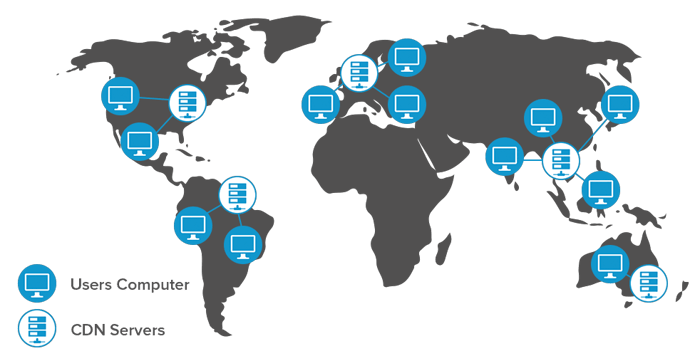

In the early days of the internet, CDNs were created to share the load by providing independent servers from which everyone could access the same information. This started with web pages, images and basic multimedia files, which were duplicated on servers around the world so that users anywhere could cut down delivery time. As the web grew, so too did CDNs and their ability to deliver all sorts of web content: HTML, CSS, files and more.

Overall, CDN infrastructure was built for handling files, storing them, moving them, and serving them.

How Did CDNs Begin to Deliver Video?

When internet video first emerged, it took the form of files that you downloaded and then played back locally. Progressive download soon followed, letting playback begin before the entire file had been downloaded. When RealNetworks and Microsoft Windows Media Server launched, they employed proprietary protocols and used UDP to stream video data instead of downloading it, while Flash video burst onto the scene using the Real-Time Messaging Protocol (RTMP).

To help with video delivery, CDNs began to license technology from all three major streaming vendors (Real, Microsoft, Macromedia) and ran those vendors’ services within their own networks. However, the licenses were not cheap, and having third-party proprietary software sit between the CDN and the user motivated CDNs to push for a new solution that reused their existing investment in HTTP infrastructure.

CDNs collaborated to standardize on HTTP-based streaming, initially forcing Adobe to release HTTP Dynamic Streaming (HDS) to allow the use of CDN infrastructure. When Apple released its HTTP Live Streaming (HLS) specification, the world adopted it because it was cheap, standards-based and scalable.

With protocols like HLS and HDS, video streams are composed of many small file snippets (chunks) played back in sequence. This approach is ideal for video-on-demand services (like Netflix, Hulu and others), but when used for live streaming it requires large client-side buffers, which increase viewer latency (a lag behind real time of sometimes more than 30 seconds).
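As a rough illustration of why chunked delivery lags behind real time, the sketch below estimates the latency contributed by the client-side buffer alone. The function name, the 10-second segment length and the three-segment buffer depth are assumptions chosen for the example, not values taken from any particular player or CDN.

```python
def buffer_latency_seconds(segment_seconds: float, buffered_segments: int) -> float:
    """Estimate the lag added by the player buffer.

    Assumes the player waits until `buffered_segments` complete
    segments have arrived before it starts playback.
    """
    if segment_seconds <= 0 or buffered_segments < 0:
        raise ValueError("segment_seconds must be > 0 and buffered_segments >= 0")
    return float(segment_seconds * buffered_segments)


# Hypothetical configuration: 10-second segments, 3 segments buffered.
print(buffer_latency_seconds(10, 3))  # 30.0
```

Under these assumptions the buffer alone accounts for 30 seconds, before any encoding or network delay is counted. Shorter segments reduce the figure but increase the number of requests the player must make.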

Can CDNs Support Real-Time Video?

The simple answer is not today, although a lot of work is being done to support emerging protocols and transfer methods.

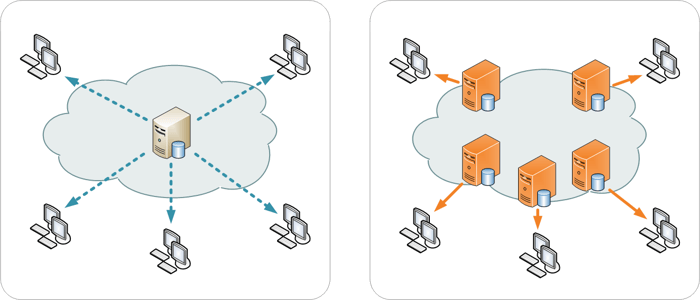

With HTTP delivery and current CDN architectures, streams are much like a game of rugby. A player (server) must have complete possession of the ball (the data, written to disk) before passing it sideways or backwards to the next teammate (another server or the client); otherwise they risk fumbling it (dropping packets).

HTTP delivery requires that video chunks be delivered in sequence, and if the network experiences slowdowns or blockage, the data is requested from the next server. The video eventually gets to the client, but the laterals from server to server slow the entire stream down compared to the real-time action. This can become even more complicated when viewers watch on different devices that require different bitrates, player support or native applications.
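The cost of those laterals can be sketched with a deliberately simplified model. It assumes that in chunked delivery a whole chunk must exist before the first hop begins and that every hop waits for the complete chunk, whereas a pass-through design forwards each small packet as soon as it arrives. Both function names and all of the numbers are hypothetical, and real networks add queuing, retries and jitter that this model ignores.

```python
def store_and_forward_latency(chunk_seconds: float, hops: int,
                              chunk_transfer_seconds: float) -> float:
    """Latency when every hop needs the complete chunk before passing it on."""
    # The chunk must be fully produced first, then each hop adds the
    # time it takes to receive the whole chunk.
    return chunk_seconds + hops * chunk_transfer_seconds


def pass_through_latency(packet_seconds: float, hops: int,
                         packet_transfer_seconds: float) -> float:
    """Latency when each hop forwards small packets as they arrive."""
    return packet_seconds + hops * packet_transfer_seconds


# Hypothetical numbers: 6-second chunks versus 20-millisecond packets, 3 hops.
chunked = store_and_forward_latency(chunk_seconds=6.0, hops=3,
                                    chunk_transfer_seconds=0.5)
streamed = pass_through_latency(packet_seconds=0.02, hops=3,
                                packet_transfer_seconds=0.01)
print(f"store-and-forward: {chunked:.2f} s, pass-through: {streamed:.2f} s")
```

In this model the dominant term is the unit of possession: the larger the piece each server must hold in full, the further the stream falls behind the live action.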

How Does Phenix Differ?

In short, Phenix isn’t a traditional CDN from an architectural standpoint, and it doesn’t leverage HTTP delivery.

Instead, Phenix built its global infrastructure on Web Real-Time Communication (WebRTC), with the goal of keeping end-to-end latency under half a second. Achieving this requires a significantly different approach and a fundamentally different architecture.

Nothing can be written to disk; it takes too long. Everything must be ready to stream, and transcoding must be completed once before streaming out to end users, because per-user transcoding causes major problems with resource utilization.
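The resource argument can be made concrete with a small count of encoding jobs. This is a minimal sketch under stated assumptions: the function name is hypothetical, the idea of a fixed set of renditions (output bitrates) is an assumption added for the example, and it does not describe how Phenix schedules transcoding internally.

```python
def transcode_jobs(viewers: int, renditions: int, per_viewer: bool) -> int:
    """Count concurrent encoding jobs for one live stream.

    per_viewer=True  -> one encode per connected viewer.
    per_viewer=False -> one encode per rendition, shared by all viewers.
    """
    if viewers < 0 or renditions < 1:
        raise ValueError("viewers must be >= 0 and renditions >= 1")
    return viewers if per_viewer else renditions


# Hypothetical audience of 100,000 viewers and 4 renditions.
print(transcode_jobs(100_000, 4, per_viewer=True))   # 100000
print(transcode_jobs(100_000, 4, per_viewer=False))  # 4
```

With shared transcoding the encoding cost stays constant as the audience grows; with per-viewer transcoding it grows in step with the audience.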

The other industry innovation that Phenix has taken full advantage of is dynamic resource allocation from cloud infrastructure providers and edge computing. Every Phenix service can be provisioned in real time to meet spontaneous demand, sometimes called the “thundering herd,” while using the extra compute power only when it’s needed.
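The general idea of demand-driven provisioning can be sketched as a sizing function that is re-evaluated as the audience changes. The function name, the per-instance capacity and the minimum instance count are all assumptions for illustration; this is not Phenix’s actual provisioning logic.

```python
import math


def instances_needed(viewers: int, viewers_per_instance: int,
                     min_instances: int = 1) -> int:
    """Return how many instances to run for the current audience size."""
    if viewers < 0 or viewers_per_instance <= 0:
        raise ValueError("viewers must be >= 0 and viewers_per_instance > 0")
    # Round up so a partially filled instance still gets provisioned,
    # and never drop below the configured floor.
    return max(min_instances, math.ceil(viewers / viewers_per_instance))


# Hypothetical capacity of 1,000 viewers per instance.
for audience in (0, 800, 2_500, 120_000):
    print(audience, instances_needed(audience, viewers_per_instance=1_000))
```

Because the result is recomputed from current demand, capacity shrinks again once the spike passes, which is what keeps the extra compute from being paid for when it is idle.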

This capability did not exist when CDNs were first conceived, and it allows Phenix to start and grow with its customers without requiring an expensive infrastructure build-out. Dynamic scaling also allows the licensed version of Phenix to be deployed within existing cloud environments to enable the creation and selling of video-based services across existing networks.

Lastly, Phenix leverages its patented SyncWatch technology to ensure that every device around the world gets the exact same stream at the same time. HTTP delivery won’t allow for that because chunks experience hang-ups between devices, servers, networks, and at the origin.